ActorCore & iClone Integrated with Real-Time EEVEE in Blender

As metaverse platforms grow and virtual events move onto them, we increasingly find ourselves in immersive experiences, surrounded by avatars connected through virtual reality. The key to designing a successful event, however, is conveying genuine human connection within these virtual spaces, which requires a solid, lifelike framework for delivering impactful virtual content. This is where ActorCore comes in: a resource for top-tier 3D assets, including lifelike human models, animations, motion capture, and real-time rendered previews, all accessible through the web.

What caught my attention are the possibilities this tool opens up. Combined with iClone 8, it offers great flexibility and simplicity for adding crowd animations and staging them for the web metaverse.

In the following sections, I share my work in Blender integrating realistic characters into a 3D environment that simulates a party, with a high level of visual quality and detail.

So, let's embark on this journey and delve into the process!

About the Artist

I am José Ripalda, a Video Game Designer and Metaverse Artist, and Co-Director of Marco Virtual MX. Our creative team focuses on delivering top-notch experiences for virtual events and the metaverse. Using Blender as my primary tool, I create thematic environments tailored for web performance.

At Marco Virtual MX, our business model centers on premium digital services for advertising. Our offerings include complete 3D environment designs for the metaverse, video games, and other visual media. We specialize in integrating 3D content into the web using WebGL, and we develop custom interactive systems for clients who want personalized digital spaces. Our expertise also extends to creating digital replicas of people, through either scanning or detailed modeling. Together, these services define us as a creative team dedicated to harnessing 3D technology.

Behind the Scenes

This tutorial covers the entire process, starting with ActorCore. It explains the different ways to preview actors and 3D assets directly on the web, including their animations and accessories. Next, we'll acquire these assets and add them to our library in iClone 8. This is the key step for assembling multiple actors in a virtual space, which can be thought of as the stage or setting where the event takes place.

While working in iClone 8, we'll explore several tools, including Motion Layer, a comprehensive system for precise actor control. We'll also look at the Timeline, which lets you blend motions together, along with other features for animating with close attention to detail.

Finally, we'll look at CC Auto Setup for Blender, an add-on that greatly streamlines importing animated actors from iClone into Blender and makes the whole workflow more efficient.

ActorCore Animated 3D Characters

When staging a virtual event, my first goal is to source lifelike performances from ActorCore's large library. First, I look for actors who are dancing skillfully or in lively conversation, then a DJ to run the DJ booth, and finally waitstaff to create a personal, intimate atmosphere for the event.

Next comes a breakdown of the ActorCore interface and the options it offers for finding and previewing actors. The interface lets users adjust various visual elements, including the lighting and the selected animation.

Through this web interface, we can mark selected actors as "FAVORITES" for the party. ActorCore's extensive library let me easily find every component I needed: characters with a realistic appearance and PBR shading, ready to animate with the Motion Animation library. Everything I required was readily available.

After preparing our selected actors, the next step is to download them for use in Character Creator or iClone. From there, the actors can be brought into other Reallusion tools, game engines, and 3D applications, in this case Blender.

It's worth emphasizing that these actors are optimized for the metaverse. Each one has a mid-range polygon count of roughly 15K to 20K, so an event can host multiple actors without a significant performance hit. iClone 8 also offers a streamlined way to import these actors directly from ActorCore; the process is fast and reliable and takes just a few clicks.
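With a fixed per-actor range of 15K to 20K polygons, sizing a crowd becomes simple arithmetic. The minimal Python sketch below assumes that range holds for every actor; the helper names and the 500,000-polygon character budget in the usage example are hypothetical, not a Reallusion or WebGL limit.

```python
from typing import Tuple

# Per-actor polygon range quoted for ActorCore actors (assumed to apply to every actor).
ACTOR_POLYS_MIN = 15_000
ACTOR_POLYS_MAX = 20_000


def crowd_poly_range(num_actors: int) -> Tuple[int, int]:
    """Best- and worst-case polygon totals for a crowd of actors."""
    if num_actors < 0:
        raise ValueError("num_actors must be non-negative")
    return num_actors * ACTOR_POLYS_MIN, num_actors * ACTOR_POLYS_MAX


def max_actors(poly_budget: int) -> int:
    """Actors that fit in a budget even if every one hits the 20K upper bound."""
    if poly_budget < 0:
        raise ValueError("poly_budget must be non-negative")
    return poly_budget // ACTOR_POLYS_MAX


if __name__ == "__main__":
    BUDGET = 500_000  # hypothetical polygon budget for characters in a web scene
    print(max_actors(BUDGET))    # 25
    print(crowd_poly_range(12))  # (180000, 240000)
```

Sizing against the 20K upper bound keeps the estimate conservative: with the hypothetical budget above, 25 actors fit, and a 12-actor party lands between 180,000 and 240,000 polygons.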

iClone Advanced Motion Editing

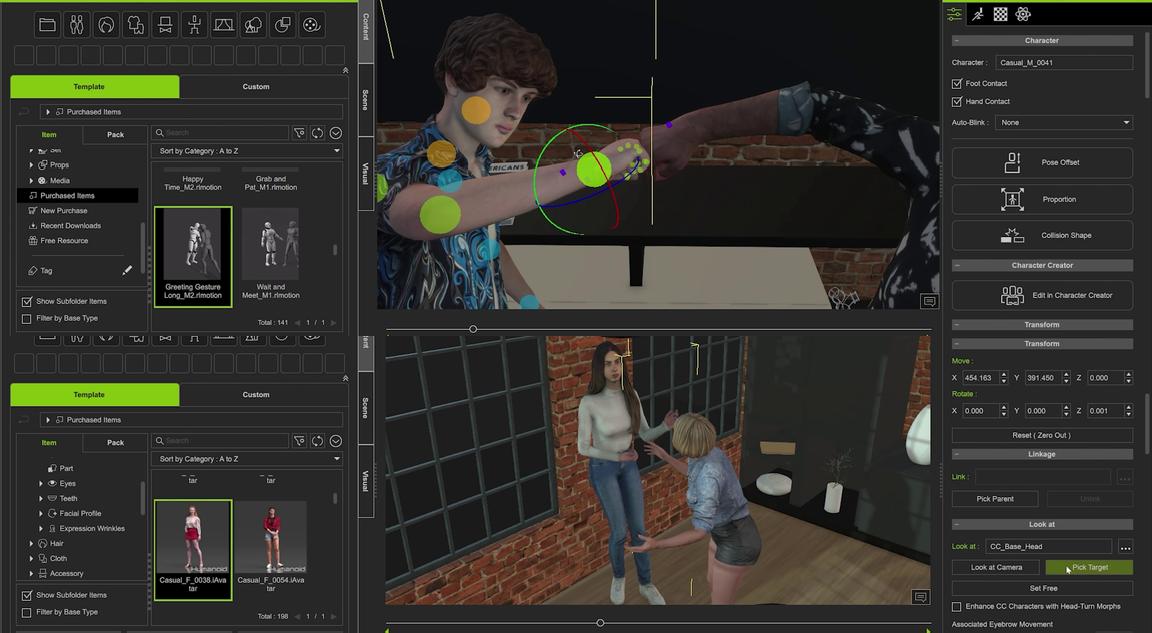

Applying an animation is as simple as dragging and dropping it onto the avatar, a visual workflow that avoids lengthy, time-consuming steps. The system also lets you adjust the actor's position and orientation while keeping the feet in steady contact with the floor. Setting a starting position before applying the animation gives better control, since it defines exactly where the actor begins the sequence.

Once the actors are set up in iClone 8, the next crucial step for our event is to bring in the apartment stage. I recommend using FBX files that include their textures so the stage imports into iClone 8 without issues.

Once the stage is set, the process is straightforward: select and drag the actors into their designated areas, such as the DJ section, which is already set up with mixing equipment. This setup is a good place to try out the Motion Layer tool.

Motion Layer is a system built into iClone 8 that gives detailed control over every aspect of an actor's performance. You can pose individual fingers, open and close the hands, edit separate body parts, switch between IK and FK, and choose specialized gizmos from a dedicated panel.

Beyond the DJ, I made specific adjustments to two guests shaking hands; Motion Layer makes it easy to adjust hand positions. The tool also offers a practical way to keep actors making eye contact while they talk. Under the ‘LOOK AT’ menu, options such as Look at Camera or Pick Target add realism by giving characters a natural gaze and interaction.

Besides the guests, it's important to include actors in other roles. When I needed waiters, ActorCore was particularly useful: its "CATERING SERVICE STAFF" set offers a range of actor types well suited to serve as waitstaff for our virtual party.

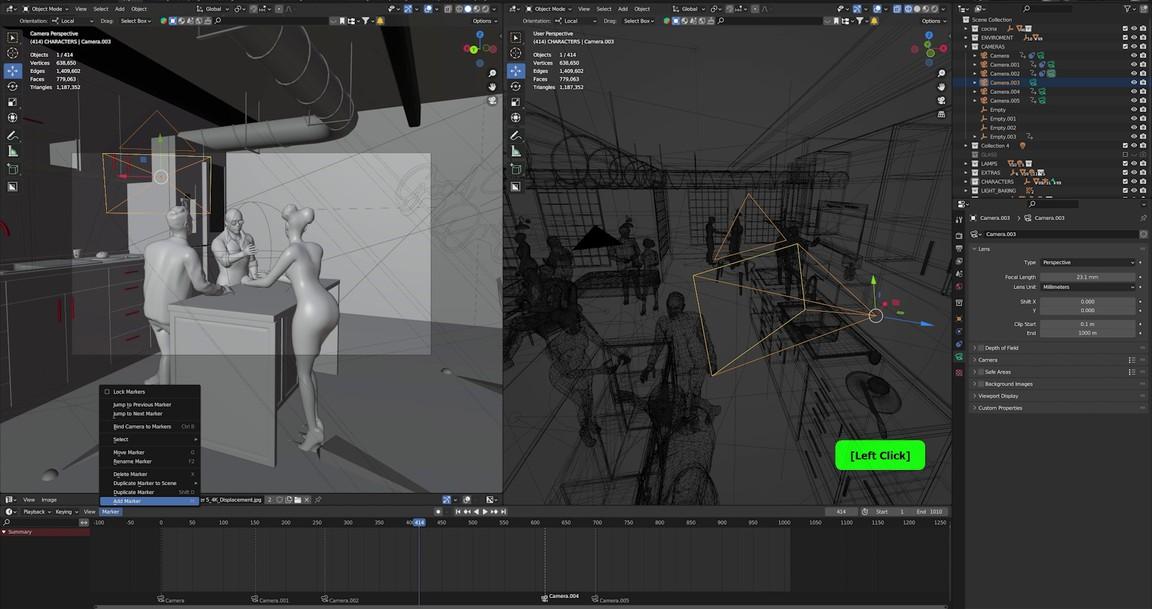

Once all the actors are prepared and animated with the tools shown in the video, the next crucial step is transferring the actors and their animations to Blender, the program chosen for building this virtual scene. In iClone 8, I exported all avatars in FBX format using the "BLENDER" preset and configured the animation and other export parameters as shown in the accompanying image.

Stunning Rendering Results in Blender

In under five minutes, I had each avatar grouped with its textures, animations, and more. Efficient as this process is, I'd like to go over some of the aesthetic details I worked on while building the apartment in Blender.

Blender offers great flexibility for building virtual environments: texturing, adjusting the lighting, and setting up an extensive, easy-to-control camera system are all straightforward. Since I wanted a realistic render that was still quick to generate, I used EEVEE's "Irradiance Volume" and "Reflection Cubemap" light probes, both very effective. Combined with effects such as bloom and ambient occlusion, they strike a good balance between visual quality and render speed while adding to the scene's realism.
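For readers who prefer scripting, the bloom and ambient occlusion settings mentioned above can also be switched on from Blender's Python console. This is a minimal sketch, assuming EEVEE Legacy (Blender 2.80 to 4.1), where `scene.eevee.use_bloom` and `scene.eevee.use_gtao` exist; EEVEE Next (Blender 4.2 and later) reworked these options, so the property names may not apply there. The intensity and distance values are illustrative starting points, not the author's settings, and `make_stub_scene` is a hypothetical helper for dry runs outside Blender. The Irradiance Volume and Reflection Cubemap probes themselves are still added through Add > Light Probe and baked from the Indirect Lighting panel.

```python
from types import SimpleNamespace


def configure_eevee_legacy(scene, bloom_intensity=0.05, ao_distance=0.2):
    """Switch a scene to EEVEE (Legacy) with bloom and ambient occlusion on.

    Assumes EEVEE Legacy property names (Blender 2.80 to 4.1).
    Values are illustrative starting points, not the article's settings.
    """
    scene.render.engine = "BLENDER_EEVEE"  # EEVEE Legacy engine identifier
    eevee = scene.eevee
    eevee.use_bloom = True                 # glow around bright areas
    eevee.bloom_intensity = bloom_intensity
    eevee.use_gtao = True                  # screen-space ambient occlusion
    eevee.gtao_distance = ao_distance      # how far apart objects can occlude each other
    # Light probes (Irradiance Volume, Reflection Cubemap) are placed via
    # Add > Light Probe and baked in Render Properties > Indirect Lighting;
    # this function does not create or bake them.
    return scene


def make_stub_scene():
    """Hypothetical attribute-only stand-in for a bpy scene, for dry runs."""
    return SimpleNamespace(render=SimpleNamespace(engine="CYCLES"),
                           eevee=SimpleNamespace())


if __name__ == "__main__":
    try:
        import bpy  # only available inside Blender
        configure_eevee_legacy(bpy.context.scene)
    except ImportError:
        print(vars(configure_eevee_legacy(make_stub_scene()).eevee))
```

Keeping the function free of direct `bpy` calls means it works on the real `bpy.context.scene` inside Blender and on a plain stand-in object elsewhere.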

With the actors ready, the CC Auto Setup for Blender add-on comes into play. It lets you select the FBX files exported from iClone 8 directly, simplifying the import into Blender. Once imported, each avatar is separated into its own ARMATURE with its animation attached, which greatly speeds up animating the party scene.

Blender's camera system offers great flexibility for capturing striking shots of the virtual event, giving a clear preview of how it will look in the metaverse. All of this work was done on a single workstation with an Intel Core i9-12900KF processor and an NVIDIA GeForce RTX 3080 graphics card. I'm delighted to have explored these tools from Reallusion, and I look forward to continuing to share and learn from such excellent, practical platforms. Thank you for joining me in this exploration!

Conclusion

ActorCore and iClone complement each other perfectly, combining animations and lifelike characters in a way that suits virtual events and VR metaverse settings. CC Auto Setup for Blender completes the workflow by quickly importing render-ready, animated characters into Blender without extra material setup. The process is intuitive and doesn't require specialized technical expertise, so it is accessible to everyone. I wholeheartedly recommend this workflow to anyone creating immersive VR metaverse productions: it simplifies the process while delivering impressive results.

![Animated 3D Events for the Metaverse, Easier than ever [$]](https://www.blendernation.com/wp-content/uploads/2023/12/Blender-Nation_Commercial-posts_1456x672-728x336.jpg)
